Creating a sharded cluster

Example on GitHub: sharded_cluster

In this tutorial, you get a sharded cluster up and running on your local machine and learn how to manage the cluster using the tt utility. To enable sharding in the cluster, the vshard module is used.

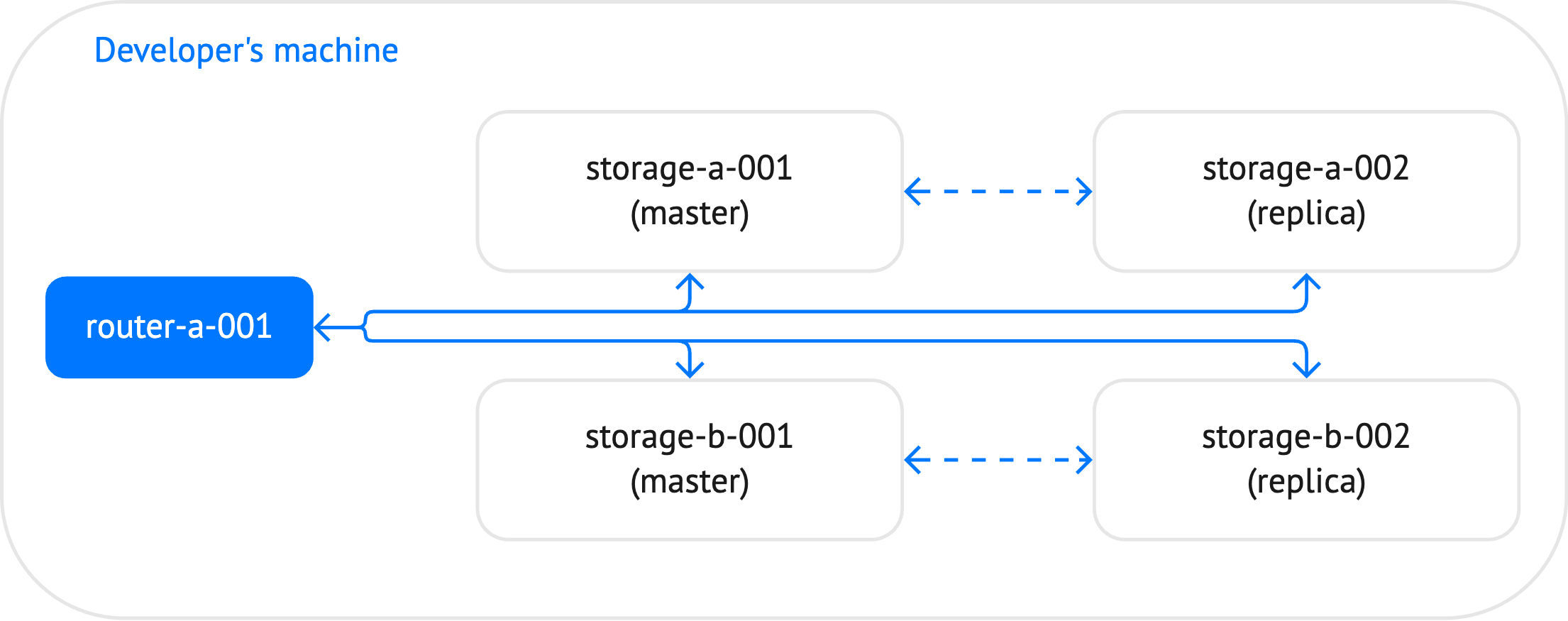

The cluster created in this tutorial includes five instances: one router and four storages, which form two replica sets.

Before starting this tutorial:

- Install the tt utility.
- Install Tarantool.

Note

The tt utility provides the ability to install Tarantool software using the tt install command.

The tt create command can be used to create an application from a predefined or custom template.

For example, the built-in vshard_cluster template enables you to create a ready-to-run sharded cluster application.

In this tutorial, the application layout is prepared manually:

Create a tt environment in the current directory by executing the tt init command.

Inside the empty instances.enabled directory of the created tt environment, create the sharded_cluster directory.

Inside instances.enabled/sharded_cluster, create the following files:

- instances.yml specifies instances to run in the current environment.
- config.yaml specifies the cluster’s configuration.
- storage.lua contains code specific for storages.
- router.lua contains code specific for a router.
- sharded_cluster-scm-1.rockspec specifies external dependencies required by the application.
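The layout described above can also be created with a short script. The following is a minimal sketch in Python that only creates the directories and empty files; it does not replace tt init, and the create_layout helper name is hypothetical.

```python
from pathlib import Path

# File names are taken from the list above.
APP_FILES = [
    "instances.yml",
    "config.yaml",
    "storage.lua",
    "router.lua",
    "sharded_cluster-scm-1.rockspec",
]


def create_layout(env_root):
    """Create instances.enabled/sharded_cluster with empty application files."""
    app_dir = Path(env_root) / "instances.enabled" / "sharded_cluster"
    app_dir.mkdir(parents=True, exist_ok=True)
    for name in APP_FILES:
        # touch() creates the file if it is missing and keeps existing content
        (app_dir / name).touch()
    return app_dir
```

Calling create_layout('.') from the tt environment directory produces the five empty files, which the following sections fill in.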

The next Developing the application section shows how to configure the cluster and write code for routing read and write requests to different storages.

Open the instances.yml file and add the following content:

storage-a-001:

storage-a-002:

storage-b-001:

storage-b-002:

router-a-001:

This file specifies instances to run in the current environment.

This section describes how to configure the cluster in the config.yaml file.

Add the credentials configuration section:

credentials:
  users:
    replicator:
      password: 'topsecret'
      roles: [replication]
    storage:
      password: 'secret'
      roles: [sharding]

In this section, two users with the specified passwords are created:

- The replicator user with the replication role.
- The storage user with the sharding role.

These users are intended to maintain replication and sharding in the cluster.

Important

It is not recommended to store passwords as plain text in a YAML configuration. To learn how to load passwords from safe storage, such as external files or environment variables, see Loading secrets from safe storage.

Add the iproto.advertise section:

iproto:
  advertise:
    peer:
      login: replicator
    sharding:
      login: storage

In this section, the following options are configured:

- iproto.advertise.peer specifies how to advertise the current instance to other cluster members. In particular, this option informs other replica set members that the replicator user should be used to connect to the current instance.
- iproto.advertise.sharding specifies how to advertise the current instance to a router and rebalancer.

Specify the total number of buckets in a sharded cluster using the sharding.bucket_count option:

sharding:
  bucket_count: 1000
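To get a feel for what this number means, the sketch below splits the buckets as evenly as possible across replica sets. It is a simplified model written in Python, not vshard's rebalancer: the split_buckets name is hypothetical, and equal weights for all replica sets are assumed.

```python
def split_buckets(bucket_count, replicasets):
    """Split bucket_count buckets as evenly as possible across replica sets."""
    base, extra = divmod(bucket_count, len(replicasets))
    # The first `extra` replica sets receive one additional bucket.
    return {
        name: base + (1 if i < extra else 0)
        for i, name in enumerate(replicasets)
    }


print(split_buckets(1000, ["storage-a", "storage-b"]))
# {'storage-a': 500, 'storage-b': 500}
```

With two replica sets, each one ends up with 500 buckets, which matches the available_rw: 500 values that vshard.router.info() reports later in this tutorial.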

Define the cluster’s topology inside the groups section. The cluster includes two groups:

- storages includes two replica sets. Each replica set contains two instances.
- routers includes one router instance.

Here is a schematic view of the cluster’s topology:

groups:
  storages:
    replicasets:
      storage-a:
        # ...
      storage-b:
        # ...
  routers:
    replicasets:
      router-a:
        # ...

To configure storages, add the following code inside the groups section:

  storages:
    app:
      module: storage
    sharding:
      roles: [storage]
    replication:
      failover: manual
    replicasets:
      storage-a:
        leader: storage-a-001
        instances:
          storage-a-001:
            iproto:
              listen:
              - uri: '127.0.0.1:3302'
          storage-a-002:
            iproto:
              listen:
              - uri: '127.0.0.1:3303'
      storage-b:
        leader: storage-b-001
        instances:
          storage-b-001:
            iproto:
              listen:
              - uri: '127.0.0.1:3304'
          storage-b-002:
            iproto:
              listen:
              - uri: '127.0.0.1:3305'

The main group-level options here are:

- app: The app.module option specifies that code specific to storages should be loaded from the storage module. This is explained below in the Adding storage code section.
- sharding: The sharding.roles option specifies that all instances inside this group act as storages. A rebalancer is selected automatically from two master instances.
- replication: The replication.failover option specifies that a leader in each replica set should be specified manually.
- replicasets: This section configures two replica sets that constitute cluster storages.

To configure a router, add the following code inside the groups section:

  routers:
    app:
      module: router
    sharding:
      roles: [router]
    replicasets:
      router-a:
        instances:
          router-a-001:
            iproto:
              listen:
              - uri: '127.0.0.1:3301'

The main group-level options here are:

- app: The app.module option specifies that code specific to a router should be loaded from the router module. This is explained below in the Adding router code section.
- sharding: The sharding.roles option specifies that an instance inside this group acts as a router.
- replicasets: This section configures one replica set with one router instance.

The resulting config.yaml file should look as follows:

credentials:
  users:
    replicator:
      password: 'topsecret'
      roles: [replication]
    storage:
      password: 'secret'
      roles: [sharding]

iproto:
  advertise:
    peer:
      login: replicator
    sharding:
      login: storage

sharding:
  bucket_count: 1000

groups:
  storages:
    app:
      module: storage
    sharding:
      roles: [storage]
    replication:
      failover: manual
    replicasets:
      storage-a:
        leader: storage-a-001
        instances:
          storage-a-001:
            iproto:
              listen:
              - uri: '127.0.0.1:3302'
          storage-a-002:
            iproto:
              listen:
              - uri: '127.0.0.1:3303'
      storage-b:
        leader: storage-b-001
        instances:
          storage-b-001:
            iproto:
              listen:
              - uri: '127.0.0.1:3304'
          storage-b-002:
            iproto:
              listen:
              - uri: '127.0.0.1:3305'
  routers:
    app:
      module: router
    sharding:
      roles: [router]
    replicasets:
      router-a:
        instances:
          router-a-001:
            iproto:
              listen:
              - uri: '127.0.0.1:3301'
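Because instances.yml and config.yaml are edited by hand, it is easy to let them drift apart. The sketch below is an illustrative consistency check in Python, not a tt feature: the instance names and listen URIs are copied from this tutorial into plain data structures, and the check_topology helper is hypothetical.

```python
# Instance names from instances.yml
INSTANCES_YML = [
    "storage-a-001", "storage-a-002",
    "storage-b-001", "storage-b-002",
    "router-a-001",
]

# Topology from config.yaml: group -> replica set -> instance -> listen URI
TOPOLOGY = {
    "storages": {
        "storage-a": {
            "storage-a-001": "127.0.0.1:3302",
            "storage-a-002": "127.0.0.1:3303",
        },
        "storage-b": {
            "storage-b-001": "127.0.0.1:3304",
            "storage-b-002": "127.0.0.1:3305",
        },
    },
    "routers": {
        "router-a": {"router-a-001": "127.0.0.1:3301"},
    },
}


def check_topology(instance_names, topology):
    """Return a list of problems; an empty list means the two files agree."""
    configured = {}
    for group in topology.values():
        for replicaset in group.values():
            configured.update(replicaset)

    problems = []
    for name in sorted(set(instance_names) - set(configured)):
        problems.append(f"{name}: listed in instances.yml but not configured")
    for name in sorted(set(configured) - set(instance_names)):
        problems.append(f"{name}: configured but missing from instances.yml")

    # Each instance on the local machine needs its own listen URI.
    uris = list(configured.values())
    for uri in sorted(set(uris)):
        if uris.count(uri) > 1:
            problems.append(f"{uri}: listen URI used by more than one instance")
    return problems


print(check_topology(INSTANCES_YML, TOPOLOGY))  # []
```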

Open the storage.lua file and create a space using the box.schema.space.create() function:

box.schema.create_space('bands', {
    format = {
        { name = 'id', type = 'unsigned' },
        { name = 'bucket_id', type = 'unsigned' },
        { name = 'band_name', type = 'string' },
        { name = 'year', type = 'unsigned' }
    },
    if_not_exists = true
})

Note that the created bands space includes the bucket_id field. This field represents a sharding key used to partition a dataset across different storage instances.

Create two indexes based on the id and bucket_id fields:

box.space.bands:create_index('id', { parts = { 'id' }, if_not_exists = true })
box.space.bands:create_index('bucket_id', { parts = { 'bucket_id' }, unique = false, if_not_exists = true })

Define the insert_band function that inserts a tuple into the created space:

function insert_band(id, bucket_id, band_name, year)
    box.space.bands:insert({ id, bucket_id, band_name, year })
end

Define the get_band function that returns data without the bucket_id value:

function get_band(id)
    local tuple = box.space.bands:get(id)
    if tuple == nil then
        return nil
    end
    return { tuple.id, tuple.band_name, tuple.year }
end

The resulting storage.lua file should look as follows:

box.schema.create_space('bands', {
    format = {
        { name = 'id', type = 'unsigned' },
        { name = 'bucket_id', type = 'unsigned' },
        { name = 'band_name', type = 'string' },
        { name = 'year', type = 'unsigned' }
    },
    if_not_exists = true
})

box.space.bands:create_index('id', { parts = { 'id' }, if_not_exists = true })
box.space.bands:create_index('bucket_id', { parts = { 'bucket_id' }, unique = false, if_not_exists = true })

function insert_band(id, bucket_id, band_name, year)
    box.space.bands:insert({ id, bucket_id, band_name, year })
end

function get_band(id)
    local tuple = box.space.bands:get(id)
    if tuple == nil then
        return nil
    end
    return { tuple.id, tuple.band_name, tuple.year }
end

Open the router.lua file and load the vshard module as follows:

local vshard = require('vshard')

Define the put function that specifies how the router selects the storage to write data:

function put(id, band_name, year)
    local bucket_id = vshard.router.bucket_id_mpcrc32({ id })
    vshard.router.callrw(bucket_id, 'insert_band', { id, bucket_id, band_name, year })
end

The following vshard router functions are used:

- vshard.router.bucket_id_mpcrc32(): Calculates a bucket ID value using a hash function.
- vshard.router.callrw(): Inserts a tuple into the storage identified by the generated bucket ID.

Create the get function for getting data:

function get(id)
    local bucket_id = vshard.router.bucket_id_mpcrc32({ id })
    return vshard.router.callro(bucket_id, 'get_band', { id })
end

Inside this function, vshard.router.callro() is called to get data from the storage identified by the generated bucket ID.
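The routing idea behind put and get can be modeled outside Tarantool. The following Python sketch is a toy, in-memory illustration under loud assumptions: zlib.crc32 over the key's string form stands in for vshard's hash (so the bucket IDs differ from what bucket_id_mpcrc32 returns), each replica set is assumed to own one contiguous, equally sized range of buckets, and the ToyRouter class is hypothetical.

```python
import zlib

BUCKET_COUNT = 1000  # sharding.bucket_count from config.yaml


def bucket_id(key, bucket_count=BUCKET_COUNT):
    """Map a key to a bucket ID in the range 1..bucket_count.

    zlib.crc32 is a stand-in hash; vshard computes its hash differently.
    """
    return zlib.crc32(str(key).encode()) % bucket_count + 1


class ToyRouter:
    """Routes each call to the in-memory 'storage' that owns the bucket."""

    def __init__(self, replicasets, bucket_count=BUCKET_COUNT):
        self.bucket_count = bucket_count
        self.names = list(replicasets)
        self.storages = {name: {} for name in self.names}

    def route(self, bucket):
        # Assumption: contiguous, equally sized bucket ranges per replica set.
        per_set = self.bucket_count // len(self.names)
        index = min((bucket - 1) // per_set, len(self.names) - 1)
        return self.names[index]

    def put(self, band_id, band_name, year):
        bucket = bucket_id(band_id, self.bucket_count)
        storage = self.storages[self.route(bucket)]
        storage[band_id] = (band_id, bucket, band_name, year)

    def get(self, band_id):
        bucket = bucket_id(band_id, self.bucket_count)
        row = self.storages[self.route(bucket)].get(band_id)
        if row is None:
            return None
        # Drop bucket_id from the result, as get_band does on a storage.
        return [row[0], row[2], row[3]]


router = ToyRouter(["storage-a", "storage-b"])
router.put(4, "The Beatles", 1960)
print(router.get(4))  # [4, 'The Beatles', 1960]
```

The point of the model is that both put and get derive the bucket ID from the same key, so a read always lands on the replica set that received the write.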

Finally, create the insert_data() function that inserts sample data into the created space:

function insert_data()
    put(1, 'Roxette', 1986)
    put(2, 'Scorpions', 1965)
    put(3, 'Ace of Base', 1987)
    put(4, 'The Beatles', 1960)
    put(5, 'Pink Floyd', 1965)
    put(6, 'The Rolling Stones', 1962)
    put(7, 'The Doors', 1965)
    put(8, 'Nirvana', 1987)
    put(9, 'Led Zeppelin', 1968)
    put(10, 'Queen', 1970)
end

The resulting router.lua file should look as follows:

local vshard = require('vshard')

function put(id, band_name, year)
    local bucket_id = vshard.router.bucket_id_mpcrc32({ id })
    vshard.router.callrw(bucket_id, 'insert_band', { id, bucket_id, band_name, year })
end

function get(id)
    local bucket_id = vshard.router.bucket_id_mpcrc32({ id })
    return vshard.router.callro(bucket_id, 'get_band', { id })
end

function insert_data()
    put(1, 'Roxette', 1986)
    put(2, 'Scorpions', 1965)
    put(3, 'Ace of Base', 1987)
    put(4, 'The Beatles', 1960)
    put(5, 'Pink Floyd', 1965)
    put(6, 'The Rolling Stones', 1962)
    put(7, 'The Doors', 1965)
    put(8, 'Nirvana', 1987)
    put(9, 'Led Zeppelin', 1968)
    put(10, 'Queen', 1970)
end

Open the sharded_cluster-scm-1.rockspec file and add the following content:

package = 'sharded_cluster'
version = 'scm-1'
source = {
    url = '/dev/null',
}

dependencies = {
    'vshard == 0.1.26'
}

build = {
    type = 'none';
}

The dependencies section includes the specified version of the vshard module.

To install dependencies, you need to build the application.

In the terminal, open the tt environment directory.

Then, execute the tt build command:

$ tt build sharded_cluster

• Running rocks make

No existing manifest. Attempting to rebuild...

• Application was successfully built

This installs the vshard dependency defined in the *.rockspec file to the .rocks directory.

To start all instances in the cluster, execute the tt start command:

$ tt start sharded_cluster

• Starting an instance [sharded_cluster:storage-a-001]...

• Starting an instance [sharded_cluster:storage-a-002]...

• Starting an instance [sharded_cluster:storage-b-001]...

• Starting an instance [sharded_cluster:storage-b-002]...

• Starting an instance [sharded_cluster:router-a-001]...

After starting instances, you need to bootstrap the cluster as follows:

Connect to the router instance using tt connect:

$ tt connect sharded_cluster:router-a-001
• Connecting to the instance...
• Connected to sharded_cluster:router-a-001

Call vshard.router.bootstrap() to perform the initial cluster bootstrap:

sharded_cluster:router-a-001> vshard.router.bootstrap()
---
- true
...

To check the cluster’s status, execute vshard.router.info() on the router:

sharded_cluster:router-a-001> vshard.router.info()
---
- replicasets:
    storage-b:
      replica:
        network_timeout: 0.5
        status: available
        uri: storage@127.0.0.1:3305
        name: storage-b-002
      bucket:
        available_rw: 500
      master:
        network_timeout: 0.5
        status: available
        uri: storage@127.0.0.1:3304
        name: storage-b-001
      name: storage-b
    storage-a:
      replica:
        network_timeout: 0.5
        status: available
        uri: storage@127.0.0.1:3303
        name: storage-a-002
      bucket:
        available_rw: 500
      master:
        network_timeout: 0.5
        status: available
        uri: storage@127.0.0.1:3302
        name: storage-a-001
      name: storage-a
  bucket:
    unreachable: 0
    available_ro: 0
    unknown: 0
    available_rw: 1000
  status: 0
  alerts: []
...

The output includes the following sections:

- replicasets: contains information about storages and their availability.
- bucket: displays the total number of read-write and read-only buckets that are currently available for this router.
- status: the number from 0 to 3 that indicates whether there are any issues with the cluster. 0 means that there are no issues.
- alerts: might describe the exact issues related to bootstrapping a cluster, for example, connection issues, failover events, or unidentified buckets.
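The sections above suggest a simple health rule: status is 0, alerts is empty, and all buckets are available for read-write. The sketch below applies that rule in Python to a trimmed copy of the output, rewritten by hand as a dictionary. The cluster_is_healthy helper is hypothetical, and treating "all buckets available_rw" as the healthy state is an assumption that fits this tutorial's freshly bootstrapped cluster.

```python
def cluster_is_healthy(info, bucket_count):
    """Return True if a router info structure reports no issues."""
    bucket = info["bucket"]
    return (
        info["status"] == 0
        and not info["alerts"]
        and bucket["available_rw"] == bucket_count
        and bucket["unreachable"] == 0
        and bucket["unknown"] == 0
    )


# Trimmed copy of the vshard.router.info() output shown above.
info = {
    "bucket": {
        "unreachable": 0,
        "available_ro": 0,
        "unknown": 0,
        "available_rw": 1000,
    },
    "status": 0,
    "alerts": [],
}

print(cluster_is_healthy(info, 1000))  # True
```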

To insert sample data, call the insert_data() function on the router:

sharded_cluster:router-a-001> insert_data()
---
...

Calling this function distributes data evenly across the cluster’s nodes.

To get a tuple by the specified ID, call the get() function:

sharded_cluster:router-a-001> get(4)
---
- [4, 'The Beatles', 1960]
...

To insert a new tuple, call the put() function:

sharded_cluster:router-a-001> put(11, 'The Who', 1962)
---
...

To check how data is distributed across the cluster’s nodes, follow the steps below:

Connect to any storage in the storage-a replica set:

$ tt connect sharded_cluster:storage-a-001
• Connecting to the instance...
• Connected to sharded_cluster:storage-a-001

Then, select all tuples in the bands space:

sharded_cluster:storage-a-001> box.space.bands:select()
---
- - [3, 11, 'Ace of Base', 1987]
  - [4, 42, 'The Beatles', 1960]
  - [6, 55, 'The Rolling Stones', 1962]
  - [9, 299, 'Led Zeppelin', 1968]
  - [10, 167, 'Queen', 1970]
  - [11, 70, 'The Who', 1962]
...

Connect to any storage in the storage-b replica set:

$ tt connect sharded_cluster:storage-b-001
• Connecting to the instance...
• Connected to sharded_cluster:storage-b-001

Select all tuples in the bands space to make sure it contains another subset of data:

sharded_cluster:storage-b-001> box.space.bands:select()
---
- - [1, 614, 'Roxette', 1986]
  - [2, 986, 'Scorpions', 1965]
  - [5, 755, 'Pink Floyd', 1965]
  - [7, 998, 'The Doors', 1965]
  - [8, 762, 'Nirvana', 1987]
...
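The two outputs can be cross-checked with a few lines of Python. The sketch below copies the (id, bucket_id) pairs from the select() results above and summarizes them; the summarize helper is hypothetical. In this sample, all bucket IDs on storage-a are at most 500 and all bucket IDs on storage-b are above 500, which is consistent with each replica set owning 500 of the 1000 buckets, although the exact ranges on another cluster may differ.

```python
# (id, bucket_id) pairs copied from the select() outputs above
STORAGE_A = [(3, 11), (4, 42), (6, 55), (9, 299), (10, 167), (11, 70)]
STORAGE_B = [(1, 614), (2, 986), (5, 755), (7, 998), (8, 762)]


def summarize(rows):
    """Return the tuple count and the min/max bucket ID seen on a replica set."""
    buckets = [bucket for _, bucket in rows]
    return {
        "tuples": len(rows),
        "min_bucket": min(buckets),
        "max_bucket": max(buckets),
    }


a, b = summarize(STORAGE_A), summarize(STORAGE_B)
print(a)  # {'tuples': 6, 'min_bucket': 11, 'max_bucket': 299}
print(b)  # {'tuples': 5, 'min_bucket': 614, 'max_bucket': 998}

ids_a = {band_id for band_id, _ in STORAGE_A}
ids_b = {band_id for band_id, _ in STORAGE_B}
# No tuple is stored on both replica sets, and together they hold IDs 1..11.
print(ids_a.isdisjoint(ids_b), sorted(ids_a | ids_b) == list(range(1, 12)))
# True True
```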