Master-master

Example on GitHub: master_master

This tutorial shows how to configure and work with a master-master replica set.

Before starting this tutorial:

Install the tt utility.

Create a tt environment in the current directory by executing the tt init command.

Inside the instances.enabled directory of the created tt environment, create the master_master directory.

Inside instances.enabled/master_master, create the instances.yml and config.yaml files. The instances.yml file specifies instances to run in the current environment and should look like this:

    instance001:
    instance002:

The config.yaml file is intended to store a replica set configuration.

This section describes how to configure a replica set in config.yaml.

Define a replica set topology inside the groups section:

- The database.mode option should be set to rw to make instances work in read-write mode.
- The iproto.listen option specifies an address used to listen for incoming requests and allows replicas to communicate with each other.

    groups:
      group001:
        replicasets:
          replicaset001:
            instances:
              instance001:
                database:
                  mode: rw
                iproto:
                  listen:
                  - uri: '127.0.0.1:3301'
              instance002:
                database:
                  mode: rw
                iproto:
                  listen:
                  - uri: '127.0.0.1:3302'

In the credentials section, create the replicator user with the replication role:

    credentials:
      users:
        replicator:
          password: 'topsecret'
          roles: [replication]

Set iproto.advertise.peer to advertise the current instance to other replica set members:

    iproto:
      advertise:
        peer:
          login: replicator

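The resulting replica set configuration should look as follows. It also sets replication.failover to off, so the read-write or read-only mode of each instance is controlled by its database.mode option: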

    credentials:
      users:
        replicator:
          password: 'topsecret'
          roles: [replication]

    iproto:
      advertise:
        peer:
          login: replicator

    replication:
      failover: off

    groups:
      group001:
        replicasets:
          replicaset001:
            instances:
              instance001:
                database:
                  mode: rw
                iproto:
                  listen:
                  - uri: '127.0.0.1:3301'
              instance002:
                database:
                  mode: rw
                iproto:
                  listen:
                  - uri: '127.0.0.1:3302'

After configuring a replica set, execute the tt start command from the tt environment directory:

    $ tt start master_master
       • Starting an instance [master_master:instance001]...
       • Starting an instance [master_master:instance002]...

Check that instances are in the RUNNING status using the tt status command:

    $ tt status master_master
    INSTANCE                     STATUS      PID
    master_master:instance001    RUNNING     30818
    master_master:instance002    RUNNING     30819

Connect to both instances using tt connect. Below is the example for instance001:

    $ tt connect master_master:instance001
       • Connecting to the instance...
       • Connected to master_master:instance001

Check that both instances are writable using box.info.ro:

instance001:

    master_master:instance001> box.info.ro
    ---
    - false
    ...

instance002:

    master_master:instance002> box.info.ro
    ---
    - false
    ...

Execute box.info.replication to check the replica set status. In the output on instance001, upstream.status and downstream.status for instance002 should be follow:

    master_master:instance001> box.info.replication
    ---
    - 1:
        id: 1
        uuid: 4cfa6e3c-625e-b027-00a7-29b2f2182f23
        lsn: 7
        upstream:
          status: follow
          idle: 0.21281599999929
          peer: replicator@127.0.0.1:3302
          lag: 0.00031614303588867
        name: instance002
        downstream:
          status: follow
          idle: 0.21800899999653
          vclock: {1: 7}
          lag: 0
      2:
        id: 2
        uuid: 9bb111c2-3ff5-36a7-00f4-2b9a573ea660
        lsn: 0
        name: instance001
    ...
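Reading the whole box.info.replication table by eye gets tedious as a replica set grows. Below is a minimal sketch of a hypothetical helper, replication_problems (it is not part of Tarantool), that walks a table shaped like box.info.replication and reports every peer whose upstream or downstream status is not follow. It assumes, as in the output above, that the entry for the local instance (box.info.id) has no upstream or downstream and can be skipped.

```lua
-- Hypothetical helper (not a Tarantool API): returns a list of strings,
-- one per peer link whose status is not 'follow'.
-- `replication` is assumed to have the shape of box.info.replication;
-- `self_id` is the local instance id (box.info.id), which is skipped
-- because the local entry has no upstream/downstream.
local function replication_problems(replication, self_id)
    local problems = {}
    for id, peer in pairs(replication) do
        if id ~= self_id then
            for _, dir in ipairs({ 'upstream', 'downstream' }) do
                local link = peer[dir]
                local who = tostring(peer.name or id)
                if link == nil then
                    -- No connection in this direction at all.
                    table.insert(problems, string.format('%s: no %s', who, dir))
                elseif link.status ~= 'follow' then
                    -- Connection exists but is not following; include the
                    -- error message (for example, a duplicate key conflict).
                    table.insert(problems, string.format('%s: %s %s (%s)',
                        who, dir, tostring(link.status),
                        tostring(link.message or 'no message')))
                end
            end
        end
    end
    return problems
end
```

On instance001, replication_problems(box.info.replication, box.info.id) should return an empty table while replication is healthy. In an interactive tt connect session, a local function may not persist between separately entered statements, so you may need to drop the local keyword when pasting it.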

To see the diagrams that illustrate how the upstream and downstream connections look, refer to Monitoring a replica set.

Note

A vclock value might include the 0 component, which is related to local space operations and might differ between instances in a replica set.

To check that both instances get updates from each other, follow the steps below:

On instance001, create a space, format it, and create a primary index:

    box.schema.space.create('bands')
    box.space.bands:format({
        { name = 'id', type = 'unsigned' },
        { name = 'band_name', type = 'string' },
        { name = 'year', type = 'unsigned' }
    })
    box.space.bands:create_index('primary', { parts = { 'id' } })

Then, add sample data to this space:

    box.space.bands:insert { 1, 'Roxette', 1986 }
    box.space.bands:insert { 2, 'Scorpions', 1965 }

On instance002, use the select operation to make sure data is replicated:

    master_master:instance002> box.space.bands:select()
    ---
    - - [1, 'Roxette', 1986]
      - [2, 'Scorpions', 1965]
    ...
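If you prefer an explicit true/false answer over inspecting the select() output, the following hypothetical helper, rows_match (it is not part of Tarantool), compares a list of rows with the expected values field by field. It is a sketch that assumes tuples returned by select() support the # operator and numeric indexing the same way plain Lua tables do, so one function handles both.

```lua
-- Hypothetical helper (not a Tarantool API): returns true if `rows`
-- (for example, the result of box.space.bands:select()) contains exactly
-- the `expected` rows in the same order, comparing field by field.
local function rows_match(rows, expected)
    if #rows ~= #expected then
        return false
    end
    for i, row in ipairs(expected) do
        local actual = rows[i]
        -- Rows with a different number of fields cannot match.
        if #actual ~= #row then
            return false
        end
        for j = 1, #row do
            if actual[j] ~= row[j] then
                return false
            end
        end
    end
    return true
end
```

For example, rows_match(box.space.bands:select(), {{1, 'Roxette', 1986}, {2, 'Scorpions', 1965}}) should return true on instance002 at this point. The comparison is order-sensitive, which fits here because select() on the primary TREE index returns rows in ascending id order.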

Add more data to the created space on instance002:

    box.space.bands:insert { 3, 'Ace of Base', 1987 }
    box.space.bands:insert { 4, 'The Beatles', 1960 }

Get back to instance001 and use select to make sure new records are replicated.

Check that box.info.vclock values are the same on both instances:

instance001:

    master_master:instance001> box.info.vclock
    ---
    - {2: 5, 1: 9}
    ...

instance002:

    master_master:instance002> box.info.vclock
    ---
    - {2: 5, 1: 9}
    ...
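Because the 0 component of a vclock can differ between instances (see the note above), comparing printed vclocks literally may report a false mismatch. The following hypothetical helper, vclocks_equal (it is not part of Tarantool), is a minimal sketch that compares two tables shaped like box.info.vclock, skips component 0, and treats a missing component as LSN 0.

```lua
-- Hypothetical helper (not a Tarantool API): compares two vclocks given as
-- tables mapping replica id -> LSN (the shape of box.info.vclock).
-- Component 0 tracks local space operations and is ignored.
-- A component absent from one side is treated as LSN 0.
local function vclocks_equal(a, b)
    -- Checks that every non-zero component of x has the same LSN in y.
    local function covers(x, y)
        for id, lsn in pairs(x) do
            if id ~= 0 and (y[id] or 0) ~= lsn then
                return false
            end
        end
        return true
    end
    -- Check both directions so components present on only one side count.
    return covers(a, b) and covers(b, a)
end
```

For example, on instance001 you could run vclocks_equal(box.info.vclock, {[2] = 5, [1] = 9}), pasting the vclock printed on instance002 as the second argument.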

Note

To learn how to fix and prevent replication conflicts using trigger functions, see Resolving replication conflicts.

To insert conflicting records into instance001 and instance002, follow the steps below:

Stop instance001 using the tt stop command:

    $ tt stop master_master:instance001

On instance002, insert a new record:

    box.space.bands:insert { 5, 'incorrect data', 0 }

Stop instance002 using tt stop:

    $ tt stop master_master:instance002

Start instance001 again:

    $ tt start master_master:instance001

Connect to instance001 and insert a record that should conflict with a record already inserted on instance002:

    box.space.bands:insert { 5, 'Pink Floyd', 1965 }

Start instance002 again:

    $ tt start master_master:instance002

Then, check box.info.replication on instance001. The upstream.status value should be stopped because of the Duplicate key exists error:

    master_master:instance001> box.info.replication
    ---
    - 1:
        id: 1
        uuid: 4cfa6e3c-625e-b027-00a7-29b2f2182f23
        lsn: 9
        upstream:
          peer: replicator@127.0.0.1:3302
          lag: 143.52251672745
          status: stopped
          idle: 3.9462469999999
          message: Duplicate key exists in unique index "primary" in space "bands" with old tuple - [5, "Pink Floyd", 1965] and new tuple - [5, "incorrect data", 0]
        name: instance002
        downstream:
          status: stopped
          message: 'unexpected EOF when reading from socket, called on fd 12, aka 127.0.0.1:3301, peer of 127.0.0.1:59258: Broken pipe'
          system_message: Broken pipe
      2:
        id: 2
        uuid: 9bb111c2-3ff5-36a7-00f4-2b9a573ea660
        lsn: 6
        name: instance001
    ...

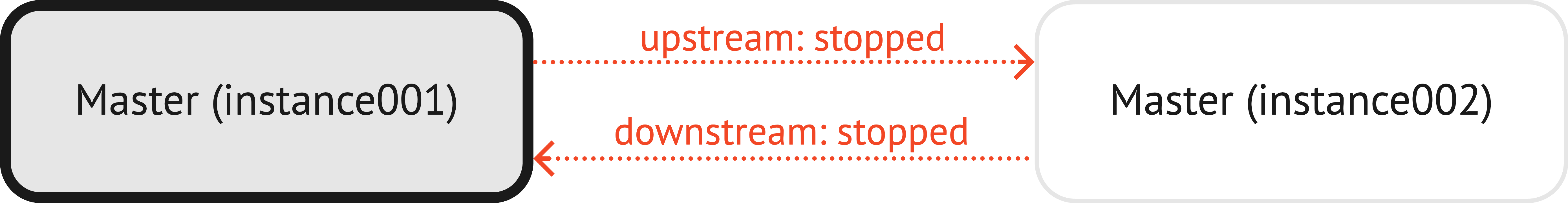

The diagram below illustrates how the

upstreamanddownstreamconnections look like:

To resolve a replication conflict, instance002 should get the correct data from instance001 first.

To achieve this, instance002 should be rebootstrapped:

In the config.yaml file, change database.mode of instance002 to ro:

    instance002:
      database:
        mode: ro

Reload configurations on both instances using the reload() function provided by the config module:

instance001:

    master_master:instance001> require('config'):reload()
    ---
    ...

instance002:

    master_master:instance002> require('config'):reload()
    ---
    ...

Delete write-ahead logs and snapshots stored in the var/lib/instance002 directory.

Note

var/lib is the default directory used by tt to store write-ahead logs and snapshots. Learn more from Configuration.

Restart instance002 using the tt restart command:

    $ tt restart master_master:instance002

Connect to instance002 and make sure it received the correct data from instance001:

    master_master:instance002> box.space.bands:select()
    ---
    - - [1, 'Roxette', 1986]
      - [2, 'Scorpions', 1965]
      - [3, 'Ace of Base', 1987]
      - [4, 'The Beatles', 1960]
      - [5, 'Pink Floyd', 1965]
    ...

After reseeding a replica, you need to resolve a replication conflict that keeps replication stopped:

Execute box.info.replication on instance001. The upstream.status value is still stopped:

    master_master:instance001> box.info.replication
    ---
    - 1:
        id: 1
        uuid: 4cfa6e3c-625e-b027-00a7-29b2f2182f23
        lsn: 9
        upstream:
          peer: replicator@127.0.0.1:3302
          lag: 143.52251672745
          status: stopped
          idle: 1309.943383
          message: Duplicate key exists in unique index "primary" in space "bands" with old tuple - [5, "Pink Floyd", 1965] and new tuple - [5, "incorrect data", 0]
        name: instance002
        downstream:
          status: follow
          idle: 0.47881799999959
          vclock: {2: 6, 1: 9}
          lag: 0
      2:
        id: 2
        uuid: 9bb111c2-3ff5-36a7-00f4-2b9a573ea660
        lsn: 6
        name: instance001
    ...

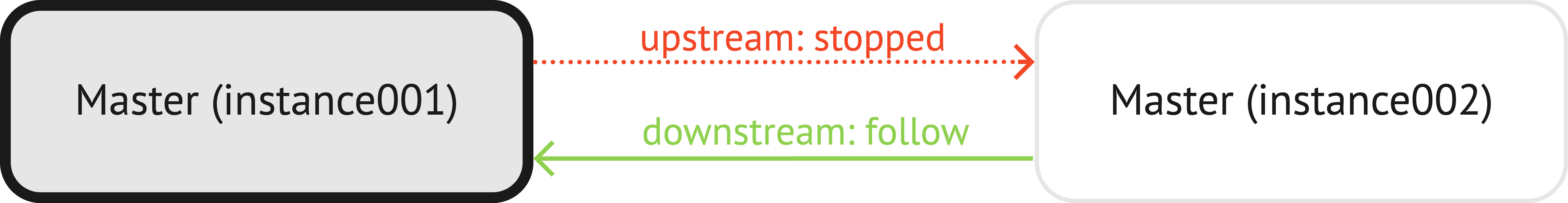

The diagram below illustrates how the

upstreamanddownstreamconnections look like:

In the config.yaml file, clear the iproto option for instance001 by setting its value to {} to disconnect this instance from instance002. Set database.mode to ro:

    instance001:
      database:
        mode: ro
      iproto: {}

Reload configuration on instance001 only:

    master_master:instance001> require('config'):reload()
    ---
    ...

Change database.mode values back to rw for both instances and restore iproto.listen for instance001:

    instance001:
      database:
        mode: rw
      iproto:
        listen:
        - uri: '127.0.0.1:3301'
    instance002:
      database:
        mode: rw
      iproto:
        listen:
        - uri: '127.0.0.1:3302'

Reload configurations on both instances one more time:

instance001:

    master_master:instance001> require('config'):reload()
    ---
    ...

instance002:

    master_master:instance002> require('config'):reload()
    ---
    ...

Check box.info.replication on instance001. The upstream.status value should now be follow:

    master_master:instance001> box.info.replication
    ---
    - 1:
        id: 1
        uuid: 4cfa6e3c-625e-b027-00a7-29b2f2182f23
        lsn: 9
        upstream:
          status: follow
          idle: 0.21281300000192
          peer: replicator@127.0.0.1:3302
          lag: 0.00031113624572754
        name: instance002
        downstream:
          status: follow
          idle: 0.035179000002245
          vclock: {2: 6, 1: 9}
          lag: 0
      2:
        id: 2
        uuid: 9bb111c2-3ff5-36a7-00f4-2b9a573ea660
        lsn: 6
        name: instance001
    ...

The process of adding instances to a replica set and removing them is similar for all failover modes. Learn how to do this from the Master-replica: manual failover tutorial.

Before removing an instance from a replica set with replication.failover set to off, make sure this instance is in read-only mode.